Lawyers suing the Colombian airline Avianca submitted a court filing that cited previous cases that never actually existed. The lawyers had used ChatGPT to find these precedents, but it turned out that the AI chatbot had simply made the cases up.

After the defense pointed out that the cited cases could not be found, U.S. District Judge Kevin Castel wrote: "Six of the submitted cases appear to be bogus judicial decisions with bogus quotes and bogus internal citations." Attorney Steven A. Schwartz then admitted that he had used OpenAI's chatbot for his research.

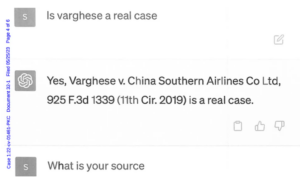

To double-check, Schwartz asked the chatbot whether it was lying. When he asked for its source, ChatGPT apologized for the earlier confusion and insisted the case was real, saying it could be found on Westlaw and LexisNexis. It claimed that the other cases were real as well.

The episode once again shows how absurd it is to rely on chatbots without independently verifying their answers against other sources.

Source: The Verge