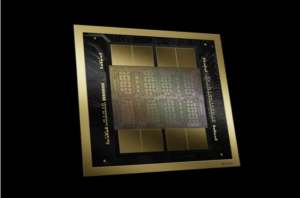

According to Nvidia, the new Blackwell B200 chip can deliver up to 30 times the performance of its predecessor for LLM inference workloads while being significantly more efficient.

Nvidia says the new chip cuts cost and power consumption by up to 25 times compared with the H100. A new GPU costs between $30,000 and $40,000.

According to an Nvidia representative, one of the key improvements is the second-generation transformer engine, which doubles the compute, bandwidth and model size by using four bits instead of eight for each neuron.
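
The arithmetic behind the "four bits instead of eight" claim can be sketched in a few lines. This is only a back-of-the-envelope illustration, not Nvidia's method: the helper names `weight_bytes` and `params_that_fit`, the 70-billion-parameter model and the 80 GB memory budget are all hypothetical, and the sketch ignores real-world overhead such as scaling factors, activations and caches.

```python
def weight_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store num_params values at the given bit width."""
    return num_params * bits_per_param // 8


def params_that_fit(memory_bytes: int, bits_per_param: int) -> int:
    """How many values of the given bit width fit in memory_bytes."""
    return memory_bytes * 8 // bits_per_param


# Hypothetical figures, chosen only for illustration.
PARAMS = 70_000_000_000        # a 70-billion-parameter model
MEMORY = 80 * 10**9            # an 80 GB memory budget

size_8bit = weight_bytes(PARAMS, 8)
size_4bit = weight_bytes(PARAMS, 4)

print(f"8-bit weights: {size_8bit / 1e9:.0f} GB")
print(f"4-bit weights: {size_4bit / 1e9:.0f} GB")
print(f"Parameters that fit at 8 bits: {params_that_fit(MEMORY, 8):,}")
print(f"Parameters that fit at 4 bits: {params_that_fit(MEMORY, 4):,}")
```

Halving the bits per value halves the bytes that must be stored and moved, so the same memory and the same bandwidth can serve twice as many values. That is the sense in which model size and effective bandwidth double.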

Source: The Verge